\(\Im\in\int\int\)

behavioral cloning

This document covers the foundations of behavioral cloning via imitation learning. We will start off by formalizing the supervised learning problem, and then proceed to cover the class of linear models — machine learning's primary workhorses. In the next document we compare non-parametric methods (kernel machines, decision trees) with deeper parametric methods (deep neural networks), and why the latter ultimately won out (captured by the bitter lesson).

supervised learning

The goal of supervised learning is for some learning algorithm \(A\) to approximate \( h = P(\boldsymbol{y}|\boldsymbol{x}) \) by inducing some hypothesis \(\hat{h}: \mathcal{X} \subseteq \mathbb{R}^{d_{in}} \mapsto \mathcal{Y} \subseteq \mathbb{R}^{d_{out}} \) from input-output vector pairs \(\mathcal{d}=\{(\boldsymbol{x}^{(0)},\boldsymbol{y}^{(0)}),...,(\boldsymbol{x}^{(n)},\boldsymbol{y}^{(n)})\}\). Approximating \(P(\boldsymbol{y}|\boldsymbol{x})\) is called discriminative modeling. The notion of supervision reflects the availability of true labels, and is also often referred to as imitation learning, behavioral cloning, or pattern recognition. When the problem admits \(\mathcal{Y} \subseteq \mathbb{N}\), we call the model produced by the learner a classification model. When \(\mathcal{Y} \subseteq \mathbb{R}\), a regression model. When \(\mathcal{Y} \subseteq \mathbb{R}^{m}\), a generative model. All three can be thought of as predictive models.

Coming up with good predictors requires quantifying the notion of "better", which is captured with a loss function \(l: \mathcal{Y} \times \mathcal{Y} \mapsto \mathbb{R}^+\). These loss functions effectively operationalize what it means to be a more efficient learner by evaluating the distance between the predicted answer \(\boldsymbol{\hat{y}}^{(i)}\) and the expected \(\boldsymbol{y}^{(i)}\). Ideally we hope to enumerate through every point in the distribution to obtain what is called the population loss \(\mathbb{E}_{(x^{(i)},y^{(i)}) \sim P}[l(\boldsymbol{\hat{y}}^{(i)}, \boldsymbol{y}^{(i)})]\) but this is uncomputable in practice since \(P\) is inaccessible. The good news is that we can approximate the population loss with sample loss \( \frac{1}{n} \sum_{i=1}^n l(\boldsymbol{\hat{y}}^{(i)}, \boldsymbol{y}^{(i)}) \), and since we are averaging a random variable, we know by the weak law of large numbers that it converges to the population loss in expectation. Thus, we approximate the population loss (along with the resulting best predictor \(h^*\)) by solving an optimization problem that looks like: \begin{align*} \hat{h} &= \underset{h \in \mathcal{H}}{\min} \mathcal{L(\hat{\boldsymbol{y}}^{(i)},\boldsymbol{y}^{(i)})} \\ &= \underset{h \in \mathcal{H}}{\min}\, \frac{1}{n} \sum_{i=1}^n l(\hat{\boldsymbol{y}}^{(i)},\boldsymbol{y}^{(i)}) \\ \end{align*}

For the class of hypothesis that can be summarized with parameters (called parameterized models), we can further specify the optimization problem above as one of the hypothesis' parameters , since they characterize everything about the predictor \(h_{\boldsymbol{\theta}}(\boldsymbol{x}^{(i)})\): \begin{align*} \hat{\boldsymbol{\theta}} &\in \underset{\boldsymbol{\theta} \in \Theta}{\operatorname{argmin}} \mathcal{L(h_{\boldsymbol{\theta}}(\boldsymbol{x}^{(i)}),\boldsymbol{y}^{(i)})} \\ &= \underset{\boldsymbol{\theta} \in \Theta}{\operatorname{argmin}} \frac{1}{n} \sum_{i=1}^n l(h_{\boldsymbol{\theta}}(\boldsymbol{x}^{(i)}),\boldsymbol{y}^{(i)}) \\ \end{align*}

opt:MLE.

gen:Overfitting, generalization, regularization

- Representation: what is the class of hypothesis \(\mathcal{H}\) we should choose?

- Optimization: what loss function do we use, and how can we efficiently solve the resulting optimization problem?

- Generalization: how well does the predictor perform on unseen instances?

The first class of models we will cover are linear models, which are always the first representation one should explore, regardless of domain — analysis approximates complicated functions with linearities, and linear algebra limits transformations to linear mappings. In machine learning we will do the same.

classification

The problem of classification is to find some \(\hat{h}: \mathbb{R}^{d} \mapsto \{0, 1, ...,c\} \subseteq \mathbb{N} \), given \(\mathcal{d}=\{(\boldsymbol{x}^{(0)},\boldsymbol{y}^{(0)}),...,(\boldsymbol{x}^{(n)},\boldsymbol{y}^{(n)})\}\). When \(c=2\), we further classify the problem as binary classification, and if \(c>2\), we call it multi-class classification.

k-nearest neighbors

naive bayes

The representation we will take a look at for classification problems is called the naive bayes model.

optimization

logistic regression

The second representation we will take a look at for classification is called logistic regression (although a better name for it would be sigmoidal classifcation).

assumptions of NB vs LR. when is one the better. for our purposes though, LR we need because NN:LOL

regression

linear regression

The problem of regression is to find some \(\hat{h}: \mathbb{R}^{d} \mapsto \mathbb{R} \), given \(\mathcal{d}=\{(\boldsymbol{x}^{(0)},\boldsymbol{y}^{(0)}),...,(\boldsymbol{x}^{(n)},\boldsymbol{y}^{(n)})\}\).

linear regression

The assumption that linear regression makes is that the output and input are linearly associated: \begin{align*} \hat{y}^{(i)} &= \theta_0 x_{0}^{(i)} + \theta_1 x_{1}^{(i)} + \cdots + \theta_n x_{n}^{(i)} \\ &= \sum_{j=0}^{n} \theta_i x_{j}^{(i)} \\ &= \boldsymbol{\theta}^\top \boldsymbol{x}^{(i)} \end{align*} By convention, \(x_0 := 1\), which turns the linear transformation into an affine one. This allows the model to bias the relationship in the absence of all other inputs. We will denote the model with \(h_{\theta}(\boldsymbol{x}^{(i)}) := \boldsymbol{\theta}^\top \boldsymbol{x}^{(i)}\) to emphasize that the model is parameterized by \(\theta\). The last thing we want to add to this model is an error term to capture uncertainty in the model or data so that \[ h_{\theta}(\boldsymbol{x}^{(i)}) := \boldsymbol{\theta}^\top \boldsymbol{x}^{(i)} + {\epsilon}^{(i)} \tag{1} \]

todo: https://x.com/docmilanfar/status/1839878337012858984Now, recall that the original goal is to approximate \( P(y|x) \) — and we can do so with maximum likelihood estimation (MLE) by choosing parameters that make the data most likely with \(P(y|x; \theta) \). However, the problem is that we don't have access to the conditional distribution! One assumption to get around this problem is to assume the existence of errors that are in the form of random gaussian noise: \(\epsilon^{(i)} \overset{\text{iid}}{\sim} \mathcal{N}(\mu = 0, \sigma^2 = k)\), where \(\mu \) is 0 and \(\sigma^2 \) is some constant k. If \(\mu \) is not 0, then this tells you there's some unmodeled feature in the model, and if \(\sigma^2 \) is unknown, then we can ___. The reasoning behind this assumption is fundamentally a central limit theorem argument, which is usually not true in practice, but is useful in unblocking our modeling.

Then, rearranging equation (1), we get \(\epsilon^{(i)} = y^{(i)} - \boldsymbol{\theta}^\top \boldsymbol{x}^{(i)}\), and thus \(y^{(i)} - \boldsymbol{\theta}^\top\boldsymbol{x}^{(i)} \overset{\text{iid}}{\sim} \mathcal{N}(\mu=0, \sigma^2=k)\). Since the \(\boldsymbol{\theta}^\top\boldsymbol{x}^{(i)}\) term is constant (\(\boldsymbol{\theta}\) is constant under MLE), we get the fact that \(y^{(i)} \overset{\text{iid}}{\sim} \mathcal{N}(\boldsymbol{\theta}^\top\boldsymbol{x}^{(i)}, \sigma^2=k)\), which is the same as: \[ p(y^{(i)}|\boldsymbol{x}^{(i)}; \boldsymbol{\theta}) = \frac{1}{\sqrt{2\pi k}} \exp\left(-\frac{(y^{(i)} - \boldsymbol{\theta}^\top\boldsymbol{x}^{(i)})^2}{2k}\right) \tag{2} \]

Finally, we can estimate the parameters to our model via MLE where \(L(\boldsymbol{\theta}) = \prod_{i=1}^n p(y^{(i)}|\boldsymbol{x}^{(i)}; \boldsymbol{\theta})\) due to ___ being iid, and so \[ \begin{align*} LL(\boldsymbol{\theta}) &= \log L(\boldsymbol{\theta}) \\ &= \sum_{i=1}^n \log p(y^{(i)}|\boldsymbol{x}^{(i)}; \boldsymbol{\theta}) \\ &= \sum_{i=1}^n \log \left(\frac{1}{\sqrt{2\pi k}} \exp\left(-\frac{(y^{(i)} - \boldsymbol{\theta}^\top\boldsymbol{x}^{(i)})^2}{2k}\right)\right) \\ &= -\frac{1}{2k} \sum_{i=1}^n (y^{(i)} - \boldsymbol{\theta}^\top\boldsymbol{x}^{(i)})^2 + C \end{align*} \] and then maximize the log likelihood: \[ \begin{align*} \hat{\boldsymbol{\theta}} &\in \underset{\boldsymbol{\theta}}{\operatorname{argmax}} \ LL(\boldsymbol{\theta}) \\ &= \underset{\boldsymbol{\theta}}{\operatorname{argmax}} \ -\frac{1}{2k} \sum_{i=1}^n (y^{(i)} - \boldsymbol{\theta}^\top\boldsymbol{x}^{(i)})^2 + C \\ &= \underset{\boldsymbol{\theta}}{\operatorname{argmin}} \ \sum_{i=1}^n (y^{(i)} - \boldsymbol{\theta}^\top\boldsymbol{x}^{(i)})^2 \end{align*} \]

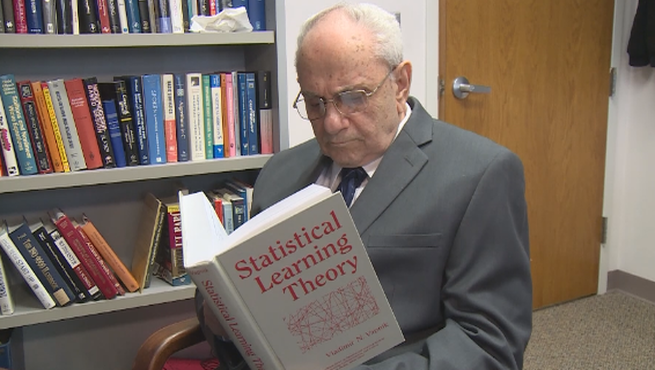

References- Shai Shalev-Shwartz, Shai Ben-David 2014

- Hastie, Tibshirani, Friedman 2017

- Hardt, Recht 2022

- Murphy 2022, 2023

- Ng, Ma 2023